… no, we’re not talking about the kind of fun that burns when taking a leak. In this article I want to summarize what I learned over the past weeks about working with Uniform Type Identifiers (UTIs).

On Windows, files were always identified only by their file extension. In the olden days Apple used several additional methods of determining what to do with certain files, amongst them HFS type codes and MIME types.

The modern way to deal with file types is to use UTIs, which are typically reverse domain names, like “public.html” for a generic HTML file or “com.adobe.pdf” for the PDF type created by Adobe. UTIs have an advantage that other methods of identifying types do not possess: a file can conform to multiple types at once.

A PDF file, for example, conforms to the following UTIs in addition to “com.adobe.pdf”: public.data, public.item, public.composite-content, public.content. For comparison, HTML conforms to public.text, public.data, public.item and public.content. So you see that these widely different file types have some things in common. This is a form of “multiple inheritance”.

Apple maintains the public.* domain as the basic building blocks of the UTI system, and we developers can base our own types on these system-declared UTIs. That a UTI “inherits” from other UTIs is called “conformance”. Take HTML as an example: the public.html type conforms to public.text, which means that HTML files can also be opened by applications that know how to deal with public.text files. Because conformance is transitive, you only need to declare the immediate parent types of a new UTI; the more generic UTIs further up the hierarchy are inherited automatically.
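Because the UTType functions are plain C (and thus usable from Objective-C), the transitive lookup described above can be sketched as a small C toy model. The type table is a hypothetical, hand-written subset following the conformances listed in this article, not the system's real declarations; on OS X you would simply call UTTypeConformsTo() and let Launch Services do the walking.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Toy model (not Launch Services): each entry lists only its IMMEDIATE
   parents, just like UTTypeConformsTo in an Info.plist declaration. */
typedef struct {
    const char *identifier;
    const char *conformsTo[3]; /* NULL-terminated list of immediate parents */
} TypeDecl;

/* Hypothetical subset of declarations, modelled on the examples above.
   The table is assumed to contain no cycles. */
static const TypeDecl kDecls[] = {
    { "public.item",              { NULL } },
    { "public.content",           { NULL } },
    { "public.data",              { "public.item", NULL } },
    { "public.text",              { "public.data", "public.content", NULL } },
    { "public.composite-content", { "public.content", NULL } },
    { "public.html",              { "public.text", NULL } },
    { "com.adobe.pdf",            { "public.data", "public.composite-content", NULL } },
};

static const TypeDecl *findDecl(const char *identifier) {
    for (size_t i = 0; i < sizeof kDecls / sizeof kDecls[0]; i++)
        if (strcmp(kDecls[i].identifier, identifier) == 0)
            return &kDecls[i];
    return NULL; /* unknown type: conforms to nothing but itself */
}

/* True if `type` equals `ancestor` or reaches it through any chain of
   parents, so inherited ("multiple inheritance") conformance is found. */
static bool typeConformsTo(const char *type, const char *ancestor) {
    if (strcmp(type, ancestor) == 0)
        return true;
    const TypeDecl *decl = findDecl(type);
    if (decl == NULL)
        return false;
    for (size_t i = 0; decl->conformsTo[i] != NULL; i++)
        if (typeConformsTo(decl->conformsTo[i], ancestor))
            return true;
    return false;
}
```

With this table, typeConformsTo("public.html", "public.item") is true even though public.html only names public.text as its parent.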

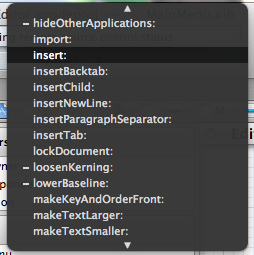

A Tale of Two Hierarchies

There really are two conformance hierarchies on the system. One is physical: is the item a bundle or a single file? The other is functional: is it an image or an address book contact? Apple has a nice chart of this in their UTI Overview:

![physical_vs_functional]()

It is exactly because of these two hierarchies that we see both public.item (physical) and public.content (functional) among the UTIs of both HTML and PDF files. Another example would be public.jpeg, which conforms to public.image on the functional side (the function is to store an image) and to public.data on the physical side.

You can use the mdls tool to inspect the UTIs currently attached to any item in your file system. This is also quite useful to see if your UTI definitions for your custom type are correct.

olivers-imac:Desktop Oliver$ mdls test.html
kMDItemContentCreationDate = 2012-09-26 12:24:28 +0000
kMDItemContentModificationDate = 2012-09-26 12:24:28 +0000
kMDItemContentType = "public.html"
kMDItemContentTypeTree = (
    "public.html",
    "public.text",
    "public.data",
    "public.item",
    "public.content"
)
kMDItemDateAdded = 2012-09-26 12:24:28 +0000
kMDItemDisplayName = "test.html"
kMDItemFSContentChangeDate = 2012-09-26 12:24:28 +0000
kMDItemFSCreationDate = 2012-09-26 12:24:28 +0000
kMDItemFSCreatorCode = ""
kMDItemFSFinderFlags = 0
kMDItemFSHasCustomIcon = 0
kMDItemFSInvisible = 0
kMDItemFSIsExtensionHidden = 0
kMDItemFSIsStationery = 0
kMDItemFSLabel = 0
kMDItemFSName = "test.html"
kMDItemFSNodeCount = 680
kMDItemFSOwnerGroupID = 20
kMDItemFSOwnerUserID = 501
kMDItemFSSize = 680
kMDItemFSTypeCode = ""
kMDItemKind = "HTML document"
kMDItemLastUsedDate = 2012-09-26 12:24:31 +0000
kMDItemLogicalSize = 680
kMDItemPhysicalSize = 4096
kMDItemUseCount = 1
kMDItemUsedDates = (
    "2012-09-25 22:00:00 +0000"
)
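The kMDItemContentTypeTree list above is the item's own type plus everything it conforms to. One way to produce a similar flattened list is a depth-first walk that skips types already seen, sketched here as a C toy model. The declaration table is a hypothetical subset, and the ordering Spotlight actually uses is not documented, so treat the order as illustrative; for public.html this particular walk happens to match the mdls output.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MAX_TREE 16

typedef struct {
    const char *identifier;
    const char *conformsTo[3]; /* NULL-terminated immediate parents */
} TypeDecl;

/* Hypothetical subset of declarations for this toy model. */
static const TypeDecl kDecls[] = {
    { "public.item",    { NULL } },
    { "public.content", { NULL } },
    { "public.data",    { "public.item", NULL } },
    { "public.text",    { "public.data", "public.content", NULL } },
    { "public.html",    { "public.text", NULL } },
};

typedef struct {
    const char *items[MAX_TREE];
    size_t count;
} TypeList;

static const TypeDecl *findDecl(const char *identifier) {
    for (size_t i = 0; i < sizeof kDecls / sizeof kDecls[0]; i++)
        if (strcmp(kDecls[i].identifier, identifier) == 0)
            return &kDecls[i];
    return NULL;
}

static bool listContains(const TypeList *list, const char *identifier) {
    for (size_t i = 0; i < list->count; i++)
        if (strcmp(list->items[i], identifier) == 0)
            return true;
    return false;
}

/* Depth-first: record the type, then each parent's subtree. A type that is
   reachable via two paths (diamond inheritance) is listed only once. */
static void collectTypeTree(const char *type, TypeList *out) {
    if (out->count == MAX_TREE || listContains(out, type))
        return;
    out->items[out->count++] = type;
    const TypeDecl *decl = findDecl(type);
    if (decl == NULL)
        return;
    for (size_t i = 0; decl->conformsTo[i] != NULL; i++)
        collectTypeTree(decl->conformsTo[i], out);
}
```

Starting from an empty TypeList, collectTypeTree("public.html", &tree) yields public.html, public.text, public.data, public.item, public.content.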

I’ve also seen instances where the content type had a dyn.* prefix. Those occur if the system did not have an exact UTI attached to the item, but instead had to derive it from the extension, OSType or other so-called “tags”. Say your app encounters pasteboard content of an unknown type: with the help of some utility functions you can derive a dynamic UTI for use with methods that require a UTI string.
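On OS X, UTTypeCreatePreferredIdentifierForTag() hands back such a dyn.* identifier when no declared type matches the tag. The C toy model below only mimics that shape: it looks an extension up in a hypothetical table and otherwise returns a placeholder with a "dyn." prefix. Real dynamic identifiers use an opaque encoding that this sketch does not reproduce, so real code should treat them as opaque strings.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

typedef struct {
    const char *extension;
    const char *identifier;
} ExtensionMapping;

/* Hypothetical tag table; the system builds its real one from all
   registered UTTypeTagSpecification entries. */
static const ExtensionMapping kMappings[] = {
    { "html", "public.html" },
    { "jpg",  "public.jpeg" },
    { "pdf",  "com.adobe.pdf" },
};

/* This toy compares extensions case-insensitively, so "JPG" matches "jpg". */
static bool equalsIgnoringCase(const char *a, const char *b) {
    while (*a != '\0' && *b != '\0') {
        if (tolower((unsigned char)*a) != tolower((unsigned char)*b))
            return false;
        a++;
        b++;
    }
    return *a == *b; /* equal only if both strings end together */
}

/* Writes the UTI for `extension` into `out`. Unknown extensions get a
   placeholder "dyn." identifier, which is NOT Apple's real dyn.* encoding. */
static void identifierForExtension(const char *extension, char *out, size_t outSize) {
    for (size_t i = 0; i < sizeof kMappings / sizeof kMappings[0]; i++) {
        if (equalsIgnoringCase(kMappings[i].extension, extension)) {
            snprintf(out, outSize, "%s", kMappings[i].identifier);
            return;
        }
    }
    snprintf(out, outSize, "dyn.placeholder-%s", extension);
}
```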

Troubleshooting UTIs gone Bonkers

UTIs get registered whenever you launch an app containing UTI definitions in its Info.plist. Because of this, UTI registrations can get out of sync with the installed apps, especially if developers have set up faulty definitions.

Developer Stefan Vogt, having dealt with such UTI troubles himself, found out how you can clean out the UTI database that Launch Services keeps. I needed to do that several times on multiple computers. My colleague had experimented with creating a com.drobnik.icatalog123 type using the icatalog extension. My own app, which registers com.drobnik.icatalog, was working fine on my machine. But when Stefan ran my app on his Mac, we would get this error trying to auto-save; both types specified .icatalog as their file extension.

2012-09-27 10:32:15.824 iCatalogEditor[519:303] -[NSDocumentController openUntitledDocumentOfType:andDisplay:error:] failed during state restoration. Here's the error:
Error Domain=NSCocoaErrorDomain Code=256 "The file couldn’t be opened."
2012-09-27 10:32:36.508 iCatalogEditor[519:303] This application can't reopen autosaved com.drobnik.icatalog123 files.

This was driving us crazy for the better part of an afternoon, simply because we hadn’t set up com.drobnik.icatalog123 anywhere in the app. It looks like OS X was wrongly inferring this UTI from the extension and was then no longer able to deal with the saved file because it had an unknown UTI.

Cleaning out the UTI database fixed the problem (thanks, Stefan Vogt!):

/System/Library/Frameworks/CoreServices.framework/Versions/A/Frameworks/LaunchServices.framework/Versions/A/Support/lsregister -kill -r -domain local -domain system -domain user

Right after this we hit Build & Run, and the error no longer occurred.

The whole episode taught us that UTIs are quite dangerous, because at present there is no mechanism to make sure that UTI registrations are correct. One misbehaving app can mess up another one. In fact there is a Radar for that: rdar://10778913.
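Since nothing guards against this, one mitigation is to check your own set of declarations for the clash we ran into: two different UTIs claiming the same extension. This is a C toy model over a hypothetical in-memory list of registrations (the bundle IDs are invented for illustration); it does not read the real Launch Services database.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *bundle;     /* hypothetical app that registered the type */
    const char *identifier; /* the UTI it declares */
    const char *extension;  /* the filename extension it claims */
} Registration;

/* True if at least two registrations claim `extension` with different UTIs,
   which is the situation that confused OS X in the story above. */
static bool extensionIsContested(const Registration *regs, size_t count,
                                 const char *extension) {
    const char *firstIdentifier = NULL;
    for (size_t i = 0; i < count; i++) {
        if (strcmp(regs[i].extension, extension) != 0)
            continue;
        if (firstIdentifier == NULL)
            firstIdentifier = regs[i].identifier;
        else if (strcmp(firstIdentifier, regs[i].identifier) != 0)
            return true;
    }
    return false;
}
```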

Getting It Right

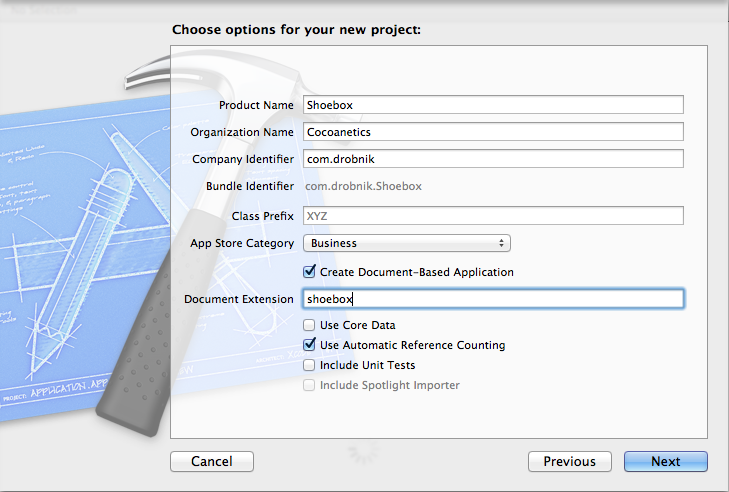

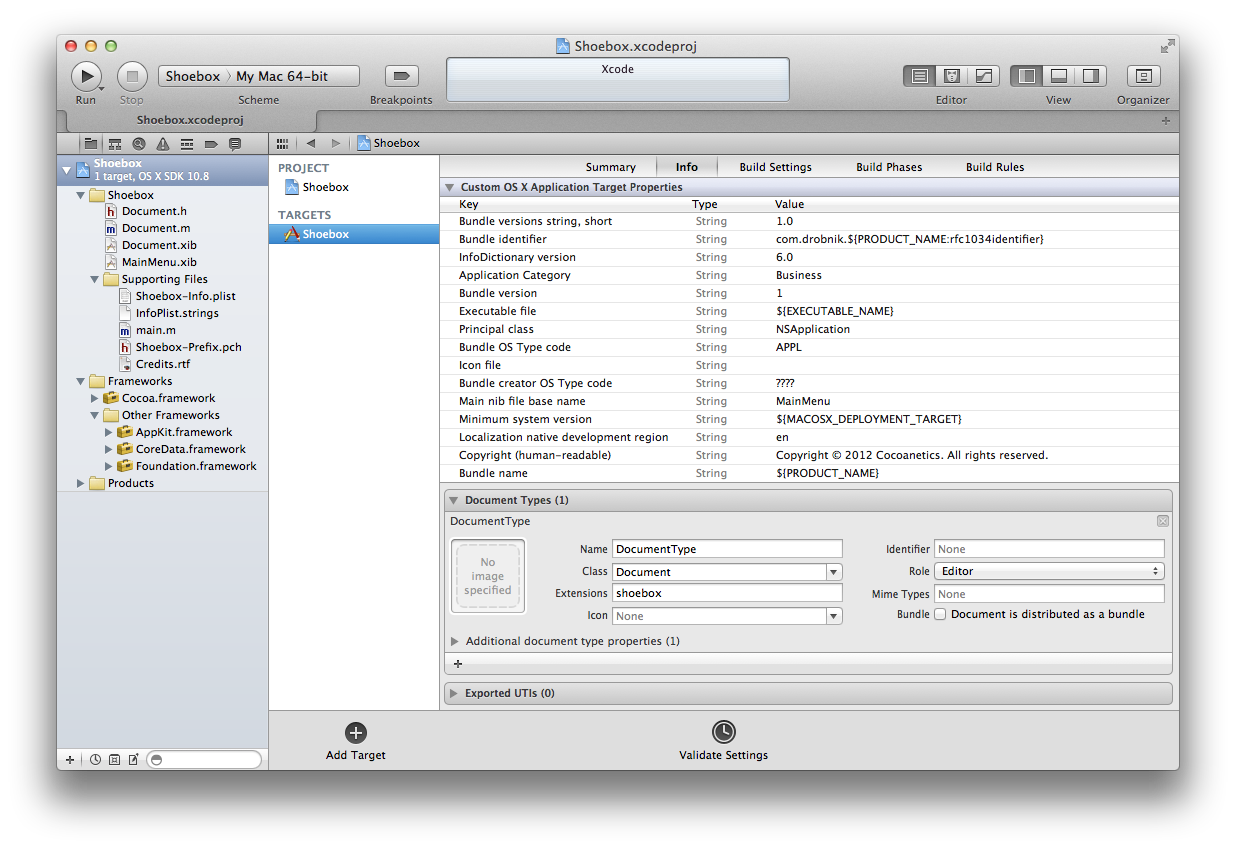

There are three sections in an app’s Info.plist whose purpose is not quite obvious:

- Document Types – Those are the types of documents that your app is able to handle. When showing a Save panel you get to choose between all of these types that have the role “Editor”. These types also specify which NSDocument subclass to use to display them. The legacy method would involve specifying OSType, MIME type or extensions; the modern method only references UTIs.

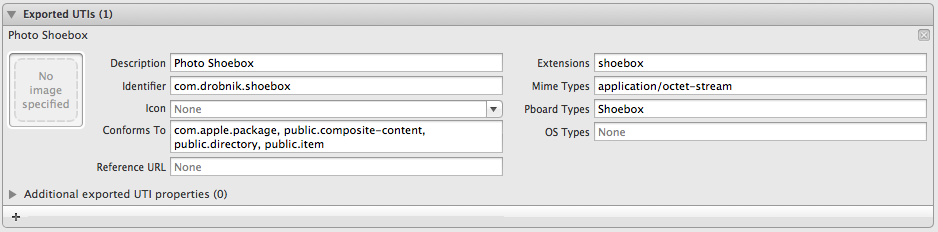

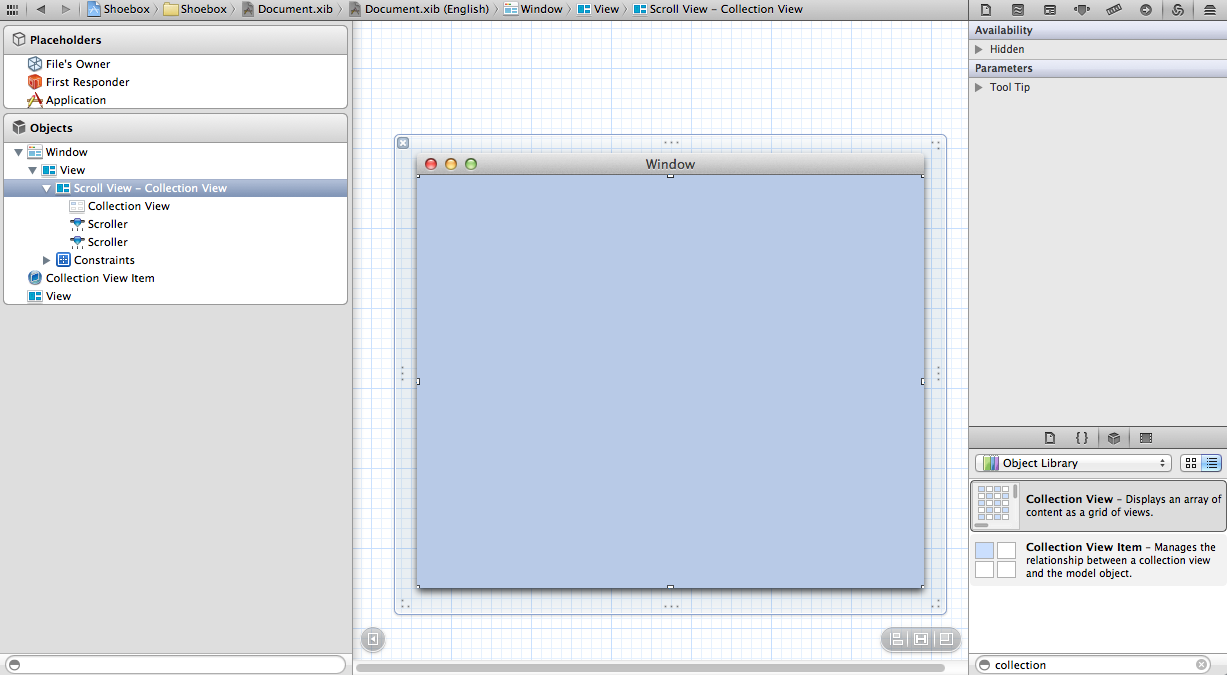

- Exported UTIs – Types which your app owns and makes available for use by all other parties. For example, an application that uses a proprietary document format should declare it as an exported UTI.

- Imported UTIs – Types that the bundle does not own, but would like to see available on the system. For example, if your app can read a proprietary format exported by another company’s app, you would want to re-declare it as an imported UTI: on some systems the other app might not be installed, and without your import definition the UTI would then be unknown.

If the system only sees the imported declaration of a UTI, then that one is used. If an exported declaration of the same UTI is present, it takes precedence.

To summarize: you define UTIs as exported or imported, and once this is set up you specify the various document types that your app supports, referencing these UTIs.
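The precedence rule for imported versus exported declarations can be written down as a tiny C toy model. The struct, enum and function names are hypothetical, and this only encodes the rule as stated in this article, not Launch Services' actual resolution code.

```c
#include <stddef.h>
#include <string.h>

typedef enum { DeclImported, DeclExported } DeclKind;

typedef struct {
    const char *identifier;  /* UTTypeIdentifier */
    DeclKind kind;           /* which Info.plist array it came from */
    const char *description; /* UTTypeDescription */
} Declaration;

/* Picks the declaration to use for `identifier`: any exported one wins;
   otherwise the first imported one; NULL if nobody declares the UTI. */
static const Declaration *resolveDeclaration(const Declaration *decls, size_t count,
                                             const char *identifier) {
    const Declaration *imported = NULL;
    for (size_t i = 0; i < count; i++) {
        if (strcmp(decls[i].identifier, identifier) != 0)
            continue;
        if (decls[i].kind == DeclExported)
            return &decls[i];
        if (imported == NULL)
            imported = &decls[i];
    }
    return imported;
}
```

An importing app's copy is therefore only a stand-in until the owning app's export shows up.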

Let’s inspect Apple’s own definition for xcodeproj, ripped straight from the Info.plist in my Xcode app bundle. Xcode defines 83 document types, 5 imported and 78 exported UTIs. If anyone gets this right, it should be Apple, right?

<dict>
    <key>UTTypeIdentifier</key>
    <string>com.apple.xcode.project</string>
    <key>UTTypeConformsTo</key>
    <array>
        <string>public.composite-content</string>
        <string>com.apple.package</string>
    </array>
    <key>UTTypeReferenceURL</key>
    <string>http://developer.apple.com/tools/xcode/</string>
    <key>UTTypeTagSpecification</key>
    <dict>
        <key>public.filename-extension</key>
        <array>
            <string>xcodeproj</string>
            <string>xcode</string>
            <string>pbproj</string>
        </array>
    </dict>
</dict>

The Xcode project type has the unique type identifier “com.apple.xcode.project”. It conforms functionally to “public.composite-content” and physically to “com.apple.package”. Then there is a reference URL, a mere bonus without functional value. The UTTypeTagSpecification key contains additional info on how the system can recognize project files. The modern way seems to be to list only the acceptable file extensions there.

This UTI definition is referenced by a document type definition.

<dict>
    <key>CFBundleTypeExtensions</key>
    <array>
        <string>xcodeproj</string>
        <string>xcode</string>
        <string>pbproj</string>
    </array>
    <key>LSIsAppleDefaultForType</key>
    <true/>
    <key>LSTypeIsPackage</key>
    <true/>
    <key>CFBundleTypeName</key>
    <string>Xcode Project</string>
    <key>CFBundleTypeIconFile</key>
    <string>xcode-project_Icon</string>
    <key>LSItemContentTypes</key>
    <array>
        <string>com.apple.xcode.project</string>
    </array>
    <key>CFBundleTypeRole</key>
    <string>Editor</string>
    <key>NSDocumentClass</key>
    <string>Xcode3ProjectDocument</string>
</dict>

The type extensions echo the same file extensions mentioned in the UTI definition. LSIsAppleDefaultForType is a secret Apple key that does not seem to be documented anywhere; it appears to mark this app as the default app for these documents.

Setting LSTypeIsPackage to true tells the system that this is a bundle format instead of a plain file. CFBundleTypeName specifies the name you see for these files if this app is chosen as the default opener. The icon file references a document icon coming from the app bundle. LSItemContentTypes specifies the UTIs that this document type covers.

The main values Apple defines for the role are None, Editor and Viewer. None is used, for example, by com.apple.dt.ide.plug-in: you cannot view or edit a plug-in. Editor allows opening and saving of this document type. Viewer only opens it and is not offered as an option for saving.

Finally the document class is the name of the NSDocument class that OS X will automatically create when opening such a file.
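The role rules just described can be condensed into a small C toy model. The enum and function names are hypothetical; the counting rule simply encodes this article's observation that only Editor document types are offered as formats in the Save panel.

```c
#include <stdbool.h>
#include <stddef.h>

typedef enum { RoleNone, RoleViewer, RoleEditor } Role;

typedef struct {
    const char *name; /* CFBundleTypeName */
    Role role;        /* CFBundleTypeRole */
} DocumentType;

static bool roleCanOpen(Role role) { return role == RoleViewer || role == RoleEditor; }
static bool roleCanSave(Role role) { return role == RoleEditor; }

/* Number of formats a Save panel would offer under the rule described
   here: only document types whose role is Editor are counted. */
static size_t saveFormatCount(const DocumentType *types, size_t count) {
    size_t n = 0;
    for (size_t i = 0; i < count; i++)
        if (roleCanSave(types[i].role))
            n++;
    return n;
}
```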

From Apple’s docs comes this info on how UTIs and document types are related:

A document type usually has a one-to-one correspondence with a particular file type. However, if your app treats more than one file type the same way, you can group those file types together to be treated by your app as a single document type. For example, if you have an old and new file format for your application’s native document type, you could group both together in a single document type entry. This way, old and new files would appear to be the same document type and would be treated the same way.

The legacy method for mapping files to documents used CFBundleTypeExtensions to specify which file extensions an app could open. According to the Info.plist Key Reference this key (which only exists for OS X apps) has been deprecated as of OS X 10.5. It still works if you don’t specify LSItemContentTypes for a document type, but it is ignored if you do. I guess Apple simply forgot to remove these extensions from Xcode’s document types, since extensions for types are now defined via the UTIs.
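To make that precedence concrete, here is a C toy model of the matching rule as this paragraph describes it (struct and function names are hypothetical; this is not Launch Services' actual code): once a document type lists any LSItemContentTypes, its CFBundleTypeExtensions no longer take part in matching.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *const *contentTypes; /* LSItemContentTypes, NULL-terminated, or NULL */
    const char *const *extensions;   /* CFBundleTypeExtensions, NULL-terminated, or NULL */
} DocTypeKeys;

static bool listHas(const char *const *list, const char *value) {
    if (list == NULL)
        return false;
    for (size_t i = 0; list[i] != NULL; i++)
        if (strcmp(list[i], value) == 0)
            return true;
    return false;
}

/* Modern path: match by UTI only, extensions are ignored.
   Legacy path (no LSItemContentTypes): fall back to the extension list. */
static bool documentTypeMatches(const DocTypeKeys *doc, const char *fileUTI,
                                const char *fileExtension) {
    if (doc->contentTypes != NULL && doc->contentTypes[0] != NULL)
        return listHas(doc->contentTypes, fileUTI);
    return listHas(doc->extensions, fileExtension);
}
```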

iOS is much cleaner in this respect, as most of the legacy keys are not even supported by the UTI definition form.

Programming against UTIs

If you are working with files you might need to find the correct UTI for a given “Tag”, i.e. MIME type or file extension.

#import <CoreServices/CoreServices.h> // MobileCoreServices on iOS

// get the UTI for an extension
// (the Create function returns a +1 reference, so hand ownership to ARC)
NSString *typeForExt = CFBridgingRelease(UTTypeCreatePreferredIdentifierForTag(kUTTagClassFilenameExtension, CFSTR("jpg"), NULL));
// "public.jpeg"

// get the UTI for a MIME type
NSString *typeForMIME = CFBridgingRelease(UTTypeCreatePreferredIdentifierForTag(kUTTagClassMIMEType, CFSTR("application/pdf"), NULL));
// "com.adobe.pdf"

Other possible “tags” to retrieve a UTI for are kUTTagClassNSPboardType for pasteboard types and kUTTagClassOSType for the legacy HFS OSType.

The other direction is also possible. Say you need the MIME type for uploading a given file:

// get the MIME type for a UTI (nil if the UTI has no MIME type tag)
NSString *MIMEType = CFBridgingRelease(UTTypeCopyPreferredTagWithClass(CFSTR("public.jpeg"), kUTTagClassMIMEType));

Note that not all UTIs have a registered MIME type. Many do, but even more don’t. So you want to fall back to “application/octet-stream” if this function returns nil.
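That fallback is easy to get wrong when it is scattered across call sites, so it helps to funnel it through one helper. A C toy model of the logic, with a hypothetical lookup table standing in for UTTypeCopyPreferredTagWithClass():

```c
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *identifier;
    const char *mimeType;
} MimeMapping;

/* Hypothetical excerpt of public.mime-type tags. */
static const MimeMapping kMimeTags[] = {
    { "public.jpeg",   "image/jpeg" },
    { "public.html",   "text/html" },
    { "com.adobe.pdf", "application/pdf" },
};

/* Returns the MIME type for a UTI, or the generic binary type when the
   UTI has no MIME tag (the nil case discussed above). */
static const char *mimeTypeForUTI(const char *identifier) {
    for (size_t i = 0; i < sizeof kMimeTags / sizeof kMimeTags[0]; i++)
        if (strcmp(kMimeTags[i].identifier, identifier) == 0)
            return kMimeTags[i].mimeType;
    return "application/octet-stream";
}
```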

A couple more functions exist for snooping around in the UTI system.

// get the URL of the bundle that declared this UTI
NSURL *URL = CFBridgingRelease(UTTypeCopyDeclaringBundleURL(CFSTR("public.jpeg")));
// result: /System/Library/CoreServices/CoreTypes.bundle/

// get the description of a UTI (a string, not a dictionary)
NSString *description = CFBridgingRelease(UTTypeCopyDescription(CFSTR("com.adobe.pdf")));
// result: "Portable Document Format (PDF)"

// get the full declaration of a UTI
NSDictionary *declaration = CFBridgingRelease(UTTypeCopyDeclaration(CFSTR("com.adobe.pdf")));

The latter shows exactly how Adobe’s PDF UTI is set up:

{
    UTTypeConformsTo = (
        "public.data",
        "public.composite-content"
    );
    UTTypeDescription = "Portable Document Format (PDF)";
    UTTypeIconFiles = (
        "PDF~iphone.png",
        "PDF@2x~iphone.png",
        "PDFStandard~ipad.png",
        "PDFStandard@2x~ipad.png",
        "PDFScalable~ipad.png",
        "PDFScalable@2x~ipad.png"
    );
    UTTypeIdentifier = "com.adobe.pdf";
    UTTypeTagSpecification = {
        "com.apple.nspboard-type" = "Apple PDF pasteboard type";
        "com.apple.ostype" = "PDF ";
        "public.filename-extension" = pdf;
        "public.mime-type" = "application/pdf";
    };
}

So if you know the UTI or any of its tags, you can get a little bit of extra info and translate from tags to the UTI and vice versa. I would have wished for a way to list all UTIs that conform to a generic type, but no such function is available in UTType.h, where all of the utility functions mentioned here are declared.
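Such a query is easy once you have the whole registry in hand, which is exactly what the public API does not give you. The C sketch below is a toy model over a hypothetical, hard-coded table, showing what the missing function could look like; nothing like typesConformingTo() exists in UTType.h.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *identifier;
    const char *conformsTo[3]; /* NULL-terminated immediate parents */
} TypeDecl;

/* Hypothetical registry; the real one lives inside Launch Services. */
static const TypeDecl kDecls[] = {
    { "public.item",              { NULL } },
    { "public.content",           { NULL } },
    { "public.data",              { "public.item", NULL } },
    { "public.text",              { "public.data", "public.content", NULL } },
    { "public.plain-text",        { "public.text", NULL } },
    { "public.html",              { "public.text", NULL } },
    { "public.composite-content", { "public.content", NULL } },
    { "com.adobe.pdf",            { "public.data", "public.composite-content", NULL } },
};

#define DECL_COUNT (sizeof kDecls / sizeof kDecls[0])

static const TypeDecl *findDecl(const char *identifier) {
    for (size_t i = 0; i < DECL_COUNT; i++)
        if (strcmp(kDecls[i].identifier, identifier) == 0)
            return &kDecls[i];
    return NULL;
}

/* Transitive conformance, as in the earlier toy model. */
static bool typeConformsTo(const char *type, const char *ancestor) {
    if (strcmp(type, ancestor) == 0)
        return true;
    const TypeDecl *decl = findDecl(type);
    if (decl == NULL)
        return false;
    for (size_t i = 0; decl->conformsTo[i] != NULL; i++)
        if (typeConformsTo(decl->conformsTo[i], ancestor))
            return true;
    return false;
}

/* Fills `out` with every registered type conforming to `generic`
   (including `generic` itself) and returns how many were found. */
static size_t typesConformingTo(const char *generic, const char **out, size_t max) {
    size_t n = 0;
    for (size_t i = 0; i < DECL_COUNT && n < max; i++)
        if (typeConformsTo(kDecls[i].identifier, generic))
            out[n++] = kDecls[i].identifier;
    return n;
}
```

For "public.text" this returns public.text, public.plain-text and public.html, in table order.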

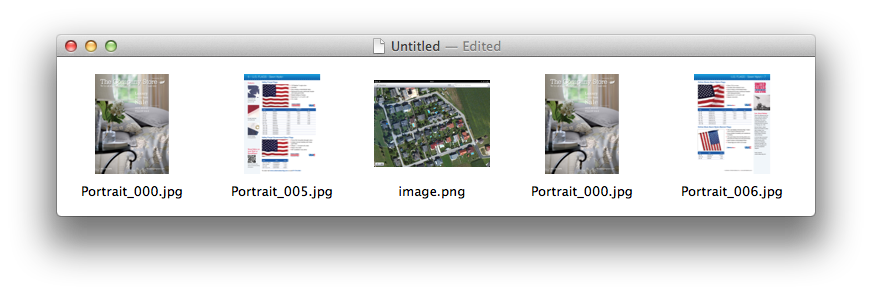

Case Study: Multiple UTIs for one Document Type

You know, I could laugh. When I dig into a topic I have a knack for discovering problems. A lesser man would probably give up; I find it fascinating. In this chapter I’ll sum up a few things I learned configuring the file types for my new iCatalog Editor app.

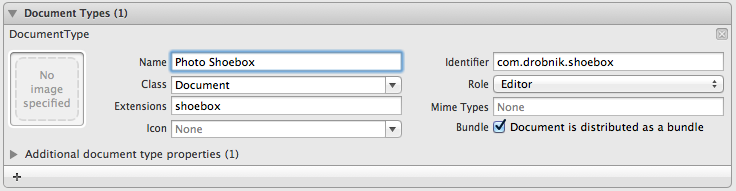

Previously created iCatalogs all have a .bundle extension, because back when I spec’ed the format I liked that the Mac by default does not show the contents of packages, which makes them simpler to move around. Going forward I wanted to create my own UTI “com.drobnik.icatalog” with an .icatalog extension, but the app should still be able to open the old bundle files. When writing, all new catalogs should use the new type. I didn’t want to mess with redefining all bundles.

<key>CFBundleDocumentTypes</key>
<array>
    <dict>
        <key>CFBundleTypeIconFile</key>
        <string>CatalogDocumentIcon</string>
        <key>CFBundleTypeName</key>
        <string>iCatalog Bundle</string>
        <key>CFBundleTypeRole</key>
        <string>Editor</string>
        <key>LSItemContentTypes</key>
        <array>
            <string>com.drobnik.icatalog</string>
            <string>com.apple.bundle</string>
        </array>
        <key>LSTypeIsPackage</key>
        <true/>
        <key>NSDocumentClass</key>
        <string>DTCatalogDocument</string>
    </dict>
</array>

… and, in line with what I mentioned above, a UTI export for my own type as well as a UTI import for the bundle type. I think you could also omit the import, because the com.apple.bundle type should be present on every system that this app will ever run on. But better safe than sorry… again, many thanks to Stefan Vogt.

<key>UTExportedTypeDeclarations</key>
<array>
    <dict>
        <key>UTTypeConformsTo</key>
        <array>
            <string>public.composite-content</string>
            <string>com.apple.package</string>
        </array>
        <key>UTTypeDescription</key>
        <string>iCatalog Bundle</string>
        <key>UTTypeIconFile</key>
        <string>CatalogDocumentIcon</string>
        <key>UTTypeIdentifier</key>
        <string>com.drobnik.icatalog</string>
        <key>UTTypeTagSpecification</key>
        <dict>
            <key>public.filename-extension</key>
            <array>
                <string>icatalog</string>
            </array>
        </dict>
    </dict>
</array>
<key>UTImportedTypeDeclarations</key>
<array>
    <dict>
        <key>UTTypeConformsTo</key>
        <array>
            <string>com.apple.package</string>
        </array>
        <key>UTTypeDescription</key>
        <string>Apple Bundle</string>
        <key>UTTypeIdentifier</key>
        <string>com.apple.bundle</string>
        <key>UTTypeTagSpecification</key>
        <dict>
            <key>public.filename-extension</key>
            <array>
                <string>bundle</string>
            </array>
        </dict>
    </dict>
</array>

So far the only special thing about this setup is the two UTIs in the document type. This allows the app to open either one.

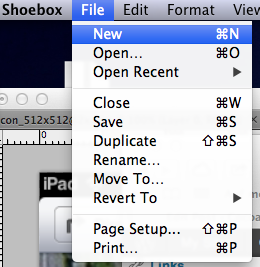

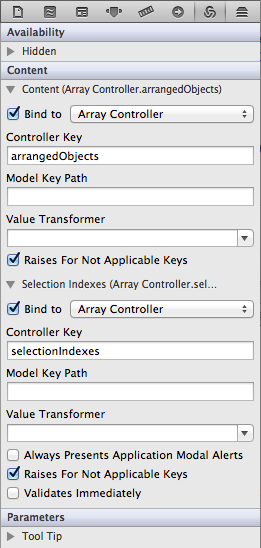

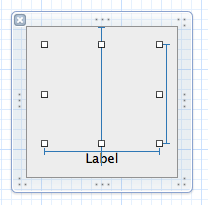

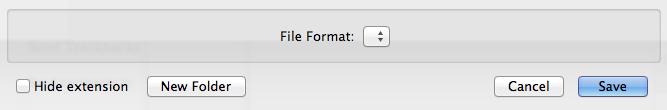

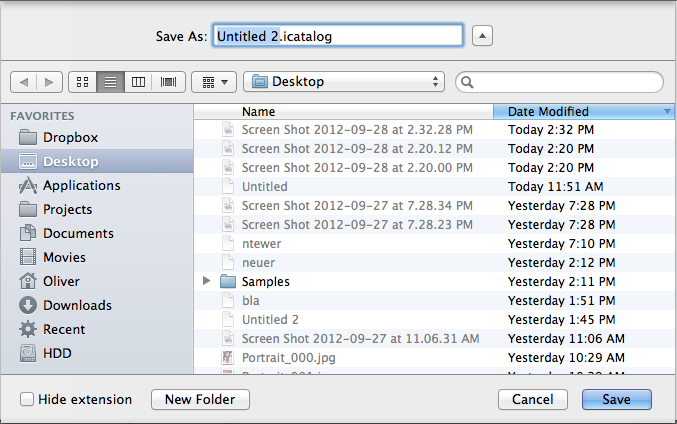

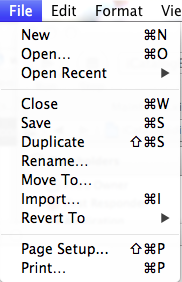

A problem became apparent when I tried to save a new document. In the Save panel I would see a File Format box, but with no text. On clicking the triangle I would see my document type’s descriptive name:

![Empty File Format box]()

![My own type pops up]()

I lost many hours trying to figure out how to fix this.

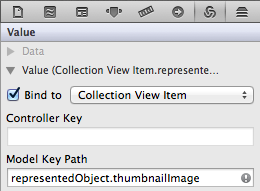

The first solution that came to mind was to separate the two formats into two distinct document types. Save panels show all the document types for which your app is defined as an Editor. With the following setup it can save both formats.

<key>CFBundleDocumentTypes</key>
<array>
    <dict>
        <key>CFBundleTypeIconFile</key>
        <string>CatalogDocumentIcon</string>
        <key>CFBundleTypeName</key>
        <string>iCatalog Bundle</string>
        <key>CFBundleTypeRole</key>
        <string>Editor</string>
        <key>LSItemContentTypes</key>
        <array>
            <string>com.drobnik.icatalog</string>
        </array>
        <key>LSTypeIsPackage</key>
        <true/>
        <key>NSDocumentClass</key>
        <string>DTCatalogDocument</string>
    </dict>
    <dict>
        <key>CFBundleTypeIconFile</key>
        <string>CatalogDocumentIcon</string>
        <key>CFBundleTypeName</key>
        <string>iCatalog Bundle (bundle)</string>
        <key>CFBundleTypeRole</key>
        <string>Editor</string>
        <key>LSItemContentTypes</key>
        <array>
            <string>com.apple.bundle</string>
        </array>
        <key>LSTypeIsPackage</key>
        <true/>
        <key>NSDocumentClass</key>
        <string>DTCatalogDocument</string>
    </dict>
</array>

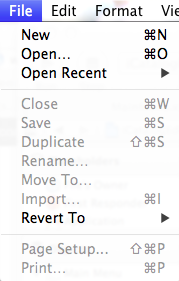

This of course results in both types showing up in the Save panel, which is not what I want.

![Multiple Save Formats]()

BTW that only works because I named the second format differently in the CFBundleTypeName. If you name them the same, then you get the exact same empty box problem shown above.

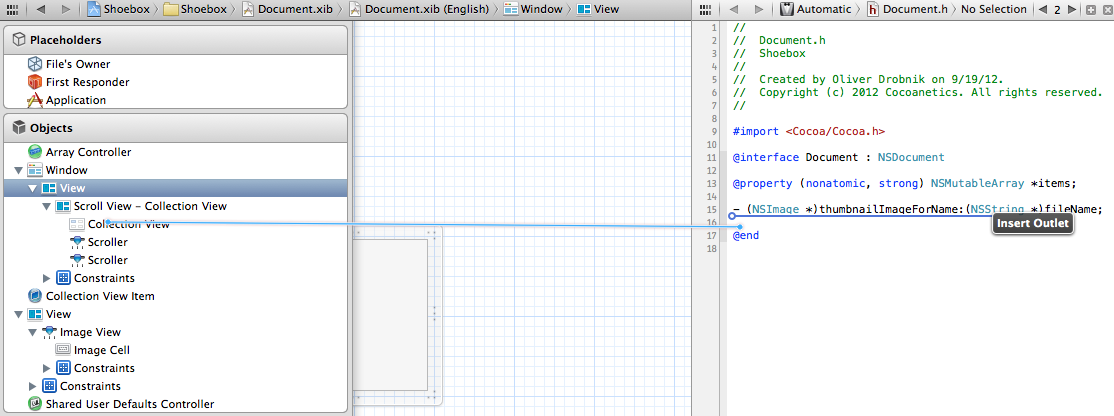

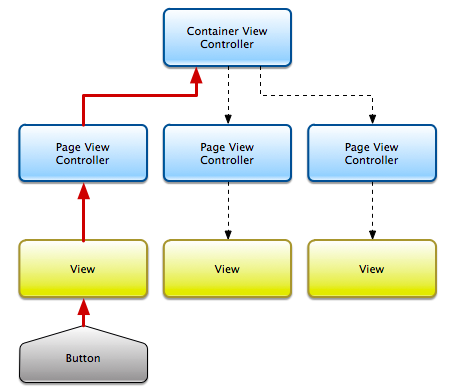

I don’t want the user to be able to create bundles, so the next logical step was to change the CFBundleTypeRole to Viewer for the bundle document type. And this fixes the problem.

![No file format chooser]()

With only one document type claiming the role of Editor the save panel does not bother to show the File Formats drop-down.

The root cause of the empty format box seems to be that document types need to have a unique display name. If two types have the exact same string then OS X bugs out. If you have multiple document types defined the fix is simple to make, but if you go with my original goal of having only one document type with two UTIs you need to touch code instead.
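If you want to catch this before OS X does, a quick check over your CFBundleTypeName values helps. A minimal C sketch, assuming you have already pulled the names out of the Info.plist into an array (that extraction step is not shown, and the function name is hypothetical):

```c
#include <stddef.h>
#include <string.h>

/* Returns the index of the first display name that repeats an earlier one,
   or -1 if all names are unique. Quadratic, which is fine for the handful
   of document types an app declares. */
static int firstDuplicateDisplayName(const char *const *names, size_t count) {
    for (size_t i = 1; i < count; i++)
        for (size_t j = 0; j < i; j++)
            if (strcmp(names[i], names[j]) == 0)
                return (int)i;
    return -1;
}
```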

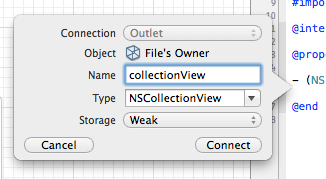

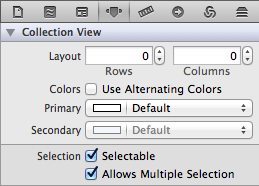

OS X has a shared NSDocumentController which determines the name to display for a given file type. To override it, you create your own subclass of NSDocumentController and instantiate it somewhere. Basically, the first document controller to be instantiated (either in code or from a NIB) wins and becomes the shared document controller.

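#import <Cocoa/Cocoa.h>

@interface DTDocumentController : NSDocumentController
@end

@implementation DTDocumentController

- (NSString *)displayNameForType:(NSString *)typeName
{
    // return something usefully unique; for this experiment simply the UTI
    return typeName;
}

@end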

In this experiment I returned the typeName (the UTI) as the display name for each type. And again this worked: the Save panel displayed the UTIs.

Even with the single document type setup with two UTIs, displayNameForType: is queried for each UTI. Since both get the same default display name from the Info.plist, we again see the bug. We could easily remedy this, for example by appending the extension in brackets or by naming them differently. But this still does not allow us to have our app be an Editor only for our own type.
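The remedy mentioned above, appending the extension in brackets, is plain string work. Here it is as a C toy model of the naming rule only: the function name and the parallel name/extension arrays are hypothetical, and in the app this logic would live inside a displayNameForType: override returning an NSString.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Writes a display name for entry `index`: the plain name if no other
   entry shares it, otherwise the name followed by the extension in brackets. */
static void uniqueDisplayName(const char *const *names, const char *const *extensions,
                              size_t count, size_t index, char *out, size_t outSize) {
    bool shared = false;
    for (size_t i = 0; i < count; i++)
        if (i != index && strcmp(names[i], names[index]) == 0)
            shared = true;
    if (shared)
        snprintf(out, outSize, "%s (.%s)", names[index], extensions[index]);
    else
        snprintf(out, outSize, "%s", names[index]);
}
```

Only colliding names get decorated, so a lone "iCatalog Bundle" stays as the user knows it.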

There is another piece of code you can override. NSDocument has a default implementation of +writableTypes which returns all UTIs of all Editor document types. The advantage of overriding it there is that we already have our own NSDocument sub-class and don’t need to mess with NSDocumentController.

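// in the @implementation of the NSDocument subclass (DTCatalogDocument)
+ (NSArray *)writableTypes
{
    // only offer our own UTI for saving; com.apple.bundle stays read-only
    return @[@"com.drobnik.icatalog"];
}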

With this little bit of code in my document class I also avoid a File Format selection, faulty or otherwise.

Conclusion

There are many things you can do wrong when defining file types and document types on OS X. When googling I could only find some well-written guides by Apple, but almost nothing in terms of entry-level tutorials.

To recap: define your own file types via exported UTIs, add file types owned by other apps as imported UTIs, and then add the document types defining the icons, names etc., referencing these UTIs. And omit the legacy keys that some people still have in their mailing list posts from many years ago. UTIs are the way to go.

Big thanks to Stefan Vogt for helping me a long way towards understanding UTIs.
